The emergence of microservices architecture has transformed how modern applications are developed and deployed.

In the conventional monolithic approach, an application is deployed as a single unit. Microservices instead break the application into smaller, self-contained services. This modular approach lets each service be developed, deployed, and scaled independently, which makes applications more agile, scalable, and resilient. In this article, we will look at several ways of running microservices, each with its own advantages and trade-offs.

Self-Managed Deployments

This is the most basic way to set up microservices for an application. Self-managed deployments involve setting up and managing the infrastructure for running microservices ourselves. This typically means provisioning virtual machines or containers, configuring networking, installing dependencies, and deploying microservices manually or via automation tools.

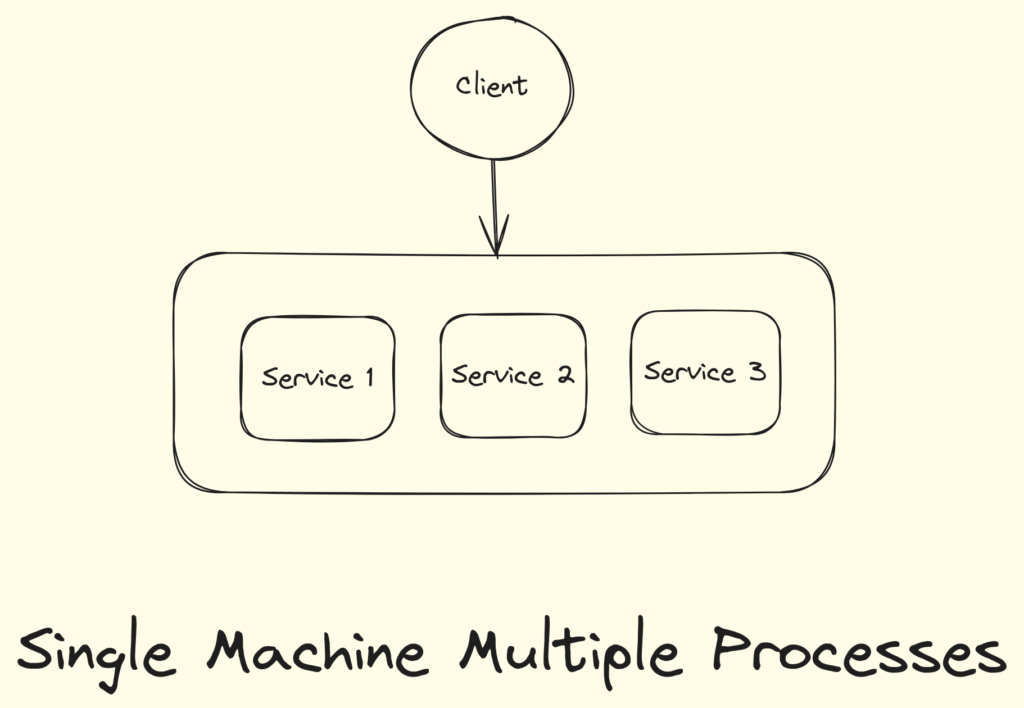

Single machine, multiple processes
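To make the single-machine layout more concrete, here is a minimal sketch that starts one OS process per service from a small launcher script. The service names, ports, and the stand-in child program are hypothetical; a real setup would launch the actual service binaries and would usually rely on a process supervisor rather than a hand-written script.

```python
import subprocess
import sys

# Hypothetical service names and ports, used only for illustration.
SERVICES = {"orders": 8001, "payments": 8002, "inventory": 8003}

# Stand-in for a real service: a child process that reports its name and port, then exits.
CHILD_CODE = "import sys; print(f'{sys.argv[1]} listening on port {sys.argv[2]}')"


def launch_all(services):
    """Start one OS process per service and return the Popen handles keyed by name."""
    return {
        name: subprocess.Popen(
            [sys.executable, "-c", CHILD_CODE, name, str(port)],
            stdout=subprocess.PIPE,
            text=True,
        )
        for name, port in services.items()
    }


def collect_output(processes):
    """Wait for every process to finish and return its stdout keyed by service name."""
    results = {}
    for name, proc in processes.items():
        out, _ = proc.communicate()
        results[name] = out.strip()
    return results


if __name__ == "__main__":
    for line in collect_output(launch_all(SERVICES)).values():
        print(line)
```

All processes share the same machine, operating system, and installed dependencies, which is where the isolation and resource-limit concerns of this approach come from.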

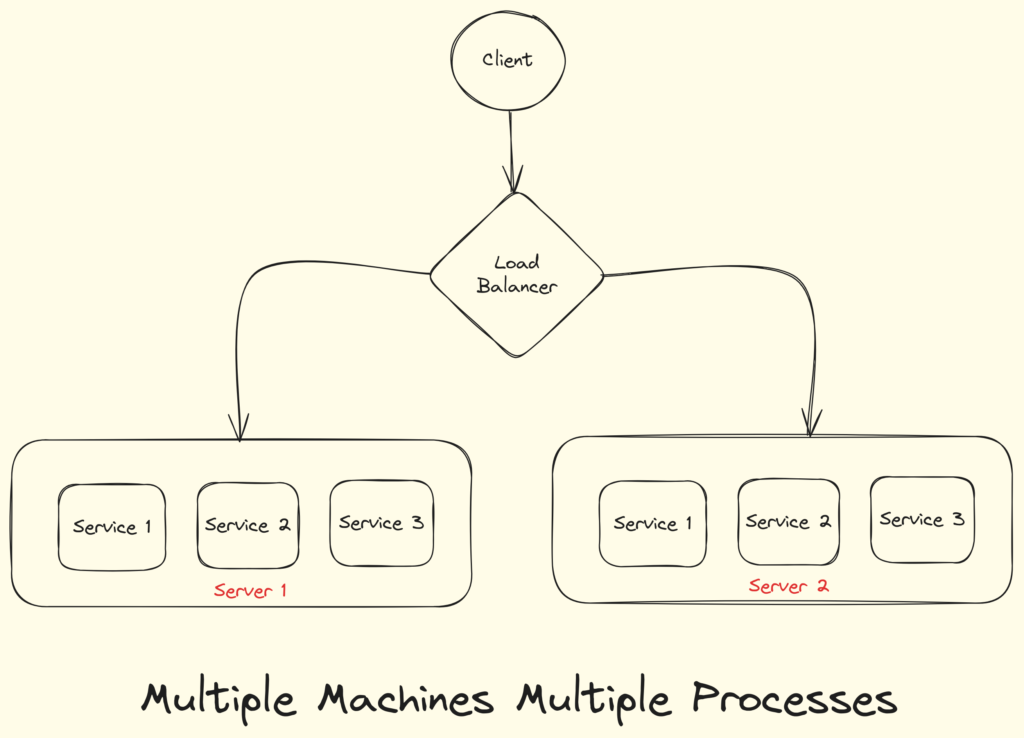

Multiple machines, multiple processes

Advantages:

- Full control over the deployment environment.

- Flexibility to customise infrastructure based on specific requirements.

- Efficient resource usage.

- Swift deployment of the services.

- Easy troubleshooting and fixed billing.

Disadvantages:

- Little or no isolation between service instances, whether they run on a single machine or across multiple machines.

- Requires expertise in infrastructure management.

- Increased operational overhead for maintenance and monitoring.

- Cannot limit the resources each instance uses.

- Scaling can be complex and time-consuming.

Containerization

Containers are a form of lightweight, portable, and self-contained environment that encapsulates an application and all its dependencies, including libraries, binaries, and configuration files, needed for it to run. Essentially, a container provides an isolated environment where an application can operate consistently across various computing environments, such as development laptops, testing environments, and production servers.

Containers can be run in two ways: directly on servers or through a managed service.

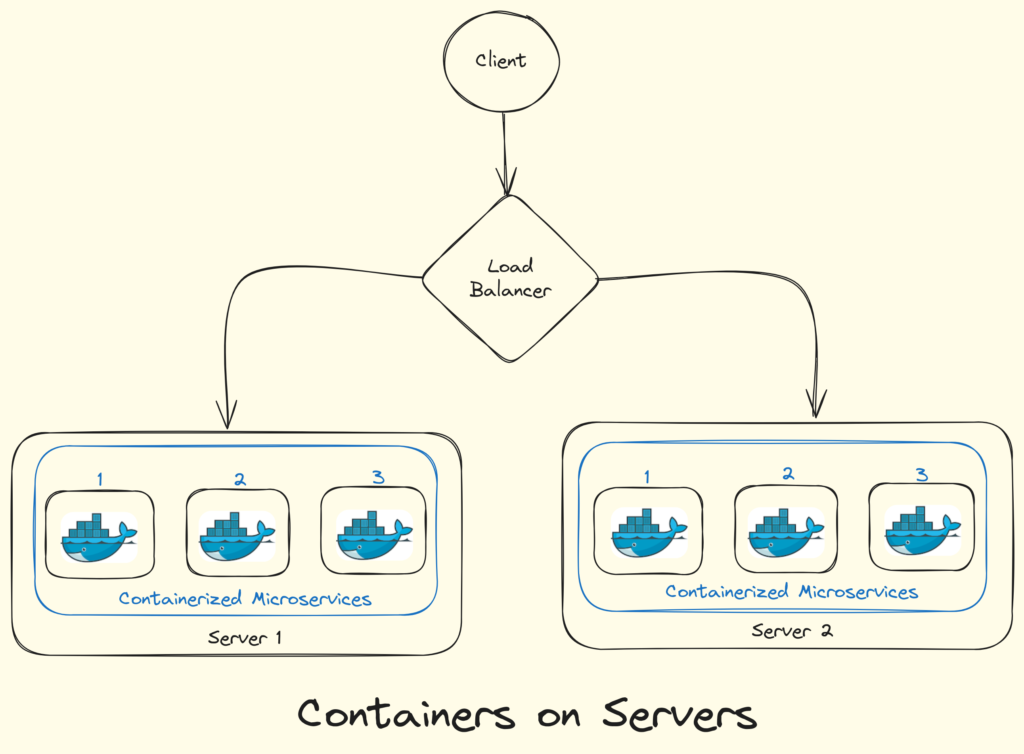

Containers on servers

Containers offer enough virtualization to run software in isolation. They replace plain processes and give us more flexibility and control. We can also distribute the workload across many machines.
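As a rough illustration of spreading container instances across machines, the sketch below assigns instances to hosts in round-robin order. The function name and the host and instance names are hypothetical, and real placement logic would also take each host's free CPU and memory into account.

```python
from itertools import cycle


def assign_round_robin(instances, hosts):
    """Assign each container instance to a host in round-robin order."""
    if not hosts:
        raise ValueError("at least one host is required")
    placement = {host: [] for host in hosts}
    # cycle() repeats the host list endlessly, so zip() stops when instances run out.
    for instance, host in zip(instances, cycle(hosts)):
        placement[host].append(instance)
    return placement


if __name__ == "__main__":
    print(assign_round_robin(["orders-1", "orders-2", "payments-1"], ["host-a", "host-b"]))
```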

Serverless containers

Most companies aim to minimize reliance on servers whenever feasible.

Containers-as-a-Service solutions like AWS Fargate and Heroku enable the operation of containerized applications without the necessity of managing servers.

Once we build the container image and hand it to the cloud provider, the provider handles the rest of the process, from provisioning virtual machines to downloading, starting, and monitoring the images. These managed services also often include a built-in load balancer, taking one more concern off the user’s plate.

Advantages:

- Processes inside containers run in isolation from each other and from the host operating system. Each container has its own filesystem, which prevents dependency conflicts unless volumes are shared carelessly between containers.

- Many instances of the same container image can run concurrently without conflicts.

- Containers are much lighter than virtual machines because they do not need to boot an entire operating system.

- By imposing CPU and memory constraints on containers, we prevent them from adversely affecting server stability.
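The CPU and memory constraints mentioned above can be applied when a container is started. The sketch below builds a `docker run` command line using Docker's `--cpus` and `--memory` flags; the helper function, image name, and limit values are hypothetical and chosen only for illustration.

```python
import shlex


def docker_run_command(image, name, cpus, memory):
    """Build a `docker run` argument list with CPU and memory limits applied."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--cpus", str(cpus),   # maximum number of CPUs the container may use
        "--memory", memory,    # hard memory limit, for example "256m"
        image,
    ]


if __name__ == "__main__":
    # Hypothetical image and limits; print the command instead of executing it.
    print(shlex.join(docker_run_command("orders-service:1.0", "orders", 0.5, "256m")))
```

The argument list could be passed to `subprocess.run` on a machine with Docker installed; printing it keeps the sketch safe to run anywhere.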

Disadvantages:

- With managed services, the cloud vendor supplies and manages most of the infrastructure, which limits how much control we have over it.

- Managed services enforce CPU and memory restrictions that are non-negotiable.

- If the managed service does not provide the required functionality, we are left without recourse.

- Containers, unlike VMs, do not offer the same level of security since they share the kernel of the host OS with one another.

- Containers are usually deployed within an infrastructure model featuring per-VM pricing. You may incur additional expenses by over-provisioning VMs to accommodate fluctuations in load.

Orchestrators

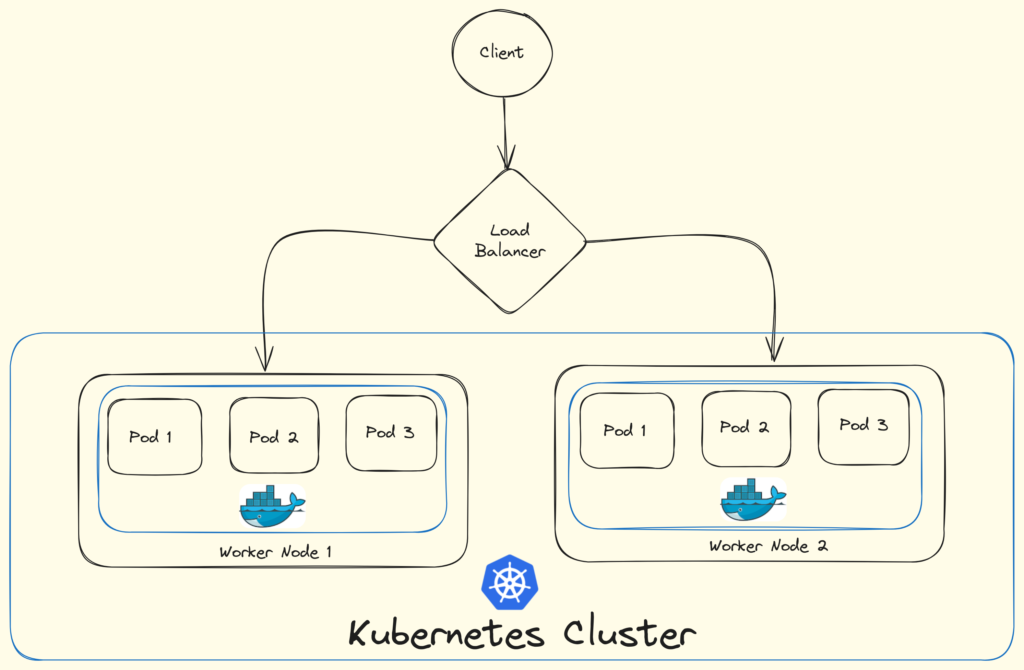

Orchestration platforms are tailored solutions designed to distribute containerized workloads across a cluster of servers. One of the most popular orchestrators is Kubernetes, an open-source project initiated by Google and now overseen by the Cloud Native Computing Foundation.

Orchestrators offer a comprehensive array of networking and operational capabilities, including routing, security, load balancing, and logging.

Kubernetes is supported by all major cloud providers and has become a popular platform for deploying microservices.
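To give a feel for how a microservice is described to Kubernetes, the sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper function, service name, image, replica count, and limits are hypothetical; a production manifest would normally add probes, ports, and a Service.

```python
import json


def deployment_manifest(name, image, replicas, cpu_limit, memory_limit):
    """Return a minimal Kubernetes Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,  # number of identical pods to keep running
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                "limits": {"cpu": cpu_limit, "memory": memory_limit}
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("orders", "orders-service:1.0", 3, "500m", "256Mi")
    print(json.dumps(manifest, indent=2))
```

Scaling the service then amounts to changing `replicas` and applying the manifest again; the orchestrator works out which machines run the extra pods.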

Advantages:

- Deploy and scale software faster.

- Lower cloud hosting costs.

- Minimise errors and security risks.

- Centralised management.

Disadvantages:

- Orchestrators have a steep learning curve.

- Kubernetes maintenance demands expertise, but managed clusters from cloud vendors simplify administration.

- Handling Kubernetes involves learning and troubleshooting deployments, slowing productivity at the early stage.

Serverless Computing

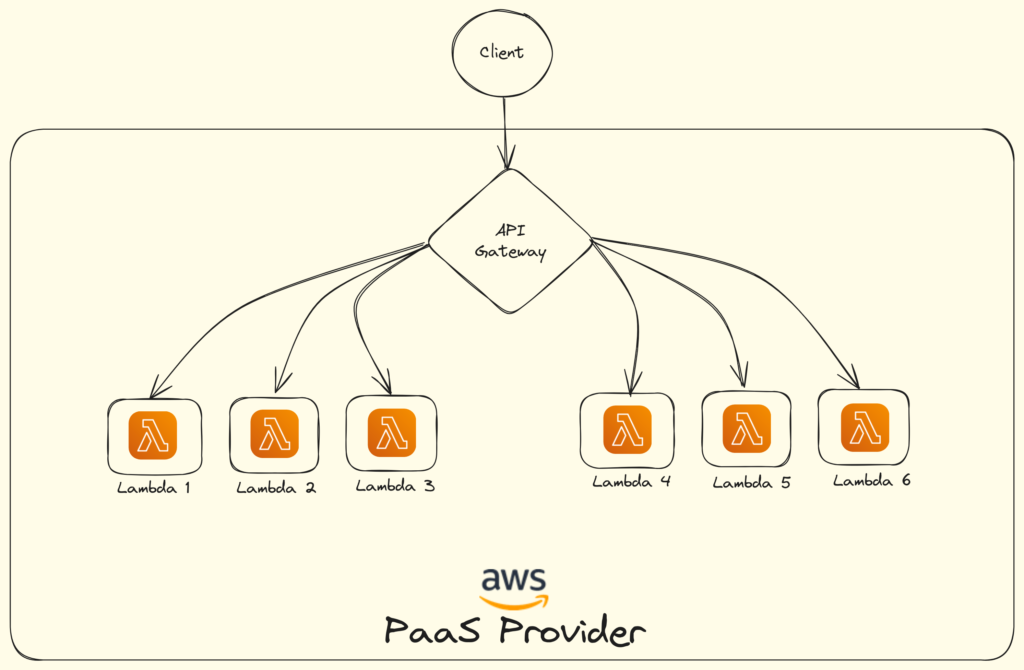

Serverless solutions such as AWS Lambda and Google Cloud Functions manage all the underlying infrastructure intricacies necessary for scalable and resilient services, allowing us to concentrate solely on coding.

Rather than dealing with servers, processes, or containers, we leverage the cloud to execute code as needed.

AWS Lambda is a good example of a serverless deployment technology, with runtimes for several languages, including Node.js, Python, and Java.

To deploy a microservice, we package it as a ZIP file and upload it to AWS Lambda, along with metadata that names the function to invoke for each incoming request (referred to as an event). Lambda automatically scales instances of the microservice to handle incoming requests, and bills based on usage metrics such as execution time and memory consumption.
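For illustration, a Python function deployed this way might look like the sketch below. The `handler(event, context)` signature follows the convention for Python functions on AWS Lambda; the event shape (a JSON string under a `body` key, as in an HTTP-style event) and the response fields are assumptions made for this example.

```python
import json


def handler(event, context):
    """Entry point that Lambda would be configured to invoke for each event."""
    # Assumed event shape: an HTTP-style event carrying a JSON string under "body".
    payload = json.loads(event.get("body") or "{}")
    name = payload.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a hand-written event; no AWS account is needed for this.
    print(handler({"body": json.dumps({"name": "orders"})}, None))
```

Because the function is plain Python, it can be exercised locally with hand-written events before it is packaged and uploaded.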

Freeing developers and organizations from having to think about servers, virtual machines, or containers is a significant advantage.

Advantages:

- Functions can be deployed instantly without the need for compiling or building container images.

- Automatic scaling. The cloud service provider dynamically scales resources to meet demand.

- You pay only for what you use, so idle functions incur no compute charges.

Disadvantages:

- Rarely accessed functions may experience delays in starting due to cloud providers shutting down resources associated with inactive functions.

- Functions cannot host long-running processes because providers cap execution time. For example, AWS Lambda limits a single invocation to 15 minutes.

- Although providers support most popular languages, some use cases may force you to switch languages because the provider does not offer the runtime you need.

- Usage-based billing makes it challenging to anticipate monthly expenses, with sudden spikes in usage leading to unexpected bills, unless there are billing alerts and other billing services that provide the required information.
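To see why usage-based bills are hard to predict, it helps to write the arithmetic down. The sketch below assumes a provider that charges per request and per GB-second of execution, which is the general shape of time-and-memory billing described earlier. The function name and all prices in the example are placeholders, not real provider rates.

```python
def estimate_monthly_cost(invocations, avg_duration_ms, memory_mb,
                          price_per_gb_second, price_per_million_requests):
    """Estimate a monthly bill from request count, duration, and memory size."""
    # GB-seconds = number of invocations * seconds per invocation * memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return compute_cost + request_cost


if __name__ == "__main__":
    # Placeholder prices chosen only to make the arithmetic easy to follow.
    normal = estimate_monthly_cost(1_000_000, 200, 512, 0.00002, 0.25)
    spike = estimate_monthly_cost(10_000_000, 200, 512, 0.00002, 0.25)
    print(f"normal month: {normal:.2f}, traffic spike: {spike:.2f}")
```

The cost grows linearly with traffic, so a tenfold spike in requests produces a tenfold bill, which is why billing alerts are worth setting up.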

Hybrid Approaches

Hybrid approaches combine elements of different deployment strategies to leverage their respective strengths. For example, organizations might use Kubernetes for container orchestration while deploying serverless functions for specific use cases within the same application.

Advantages:

- Flexibility to tailor deployment strategies based on application requirements.

- Optimized resource utilization and cost efficiency.

- Reduced vendor lock-in by leveraging multiple platforms and tools.

Disadvantages:

- Complexity in managing and integrating multiple deployment strategies.

- Potential overhead in maintaining compatibility and consistency across environments.

- Increased learning curve and operational complexity for teams.

Conclusion

Deploying microservices means choosing a deployment model that fits your organization’s goals, technical needs, and team skills. Whether you go for self-managed setups, containers, container orchestration systems, serverless frameworks, or hybrid approaches, each choice brings distinct advantages and complexities.

Understanding the pros, cons, and features of each method helps you use microservices to build apps that grow, stay strong, and adapt to change. It’s vital to keep reviewing and tweaking your deployment plans to match new tech and business needs.